Friday, June 21, 2024

Kafka Guarantees

- Messages are appended to a topic-partition in the order they are sent

- Consumers read messages in the order stored in a topic-partition

- With a replication factor of N, producers and consumers can tolerate up to N-1 brokers being down

- This is why a replication factor of 3 is a good idea:

  - Allows for one broker to be taken down for maintenance

  - Allows for another broker to be taken down unexpectedly

- As long as the number of partitions remains constant for a topic (no new partitions), the same key will always go to the same partition
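
The key-to-partition guarantee follows from the partition being computed as a hash of the key modulo the partition count. Below is a minimal sketch of that idea. The function name `pick_partition` and the sample keys are hypothetical, and CRC32 is only a stand-in hash (Kafka's default partitioner uses murmur2), so the partition numbers here will not match a real cluster.

```python
import zlib


def pick_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition: hash(key) mod partition count.

    CRC32 is a stand-in here; Kafka's default partitioner uses murmur2.
    """
    return zlib.crc32(key) % num_partitions


keys = [b"user-1", b"user-2", b"user-3", b"user-4"]

# With a fixed partition count, a key always lands on the same partition.
before = {k: pick_partition(k, 3) for k in keys}
again = {k: pick_partition(k, 3) for k in keys}
print(before == again)  # True

# After adding a partition the modulus changes, so some keys may move.
after = {k: pick_partition(k, 4) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(moved)
```

Any key listed in `moved` would break per-key ordering across the resize, which is why the guarantee only holds while the partition count stays constant.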

Zookeeper

- Zookeeper manages brokers (keeps a list of them)

- Zookeeper helps in performing leader election for partitions

- Zookeeper sends notifications to Kafka in case of changes (e.g. new topic, broker dies, broker comes up, delete topics, etc...)

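- Kafka can't work without Zookeeper in Zookeeper-based deployments (newer Kafka versions can run in KRaft mode instead, which removes the Zookeeper dependency)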

- Zookeeper by design operates with an odd number of servers (3, 5, 7)

- Zookeeper has a leader (handles writes); the rest of the servers are followers (handle reads)

- (Zookeeper does NOT store consumer offsets with Kafka > v0.10)
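
One way to see why odd ensemble sizes are preferred: Zookeeper needs a majority of its servers up to stay available, so an even-sized ensemble tolerates no more failures than the odd size just below it. The helper names below are hypothetical and the sketch is only the arithmetic, not anything Zookeeper exposes.

```python
def majority(n: int) -> int:
    """Smallest number of servers that forms a majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Servers that can fail while a majority is still up."""
    return n - majority(n)


for n in (3, 4, 5, 6, 7):
    print(n, majority(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
# 6 4 2
# 7 4 3
```

Going from 3 to 4 servers (or 5 to 6) adds a machine without adding any fault tolerance.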

Kafka Broker Discovery

- Every Kafka broker is also called a "bootstrap server"

- That means that you only need to connect to one broker, and you will be connected to the entire cluster.

- Each broker knows about all brokers, topics and partitions (metadata)
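
The discovery idea can be pictured with a toy model: a client contacts any one broker, receives metadata for the whole cluster, and then knows which broker to talk to for a given partition. Everything below (broker ids, addresses, the `orders` topic, and both function names) is hypothetical and only mimics the flow; it is not the Kafka client API.

```python
# Toy cluster metadata that every broker is assumed to hold.
CLUSTER = {
    "brokers": {
        101: "broker-101:9092",
        102: "broker-102:9092",
        103: "broker-103:9092",
    },
    # (topic, partition) -> id of the broker leading that partition
    "leaders": {("orders", 0): 101, ("orders", 1): 102, ("orders", 2): 103},
}


def metadata_request(bootstrap_broker_id: int) -> dict:
    """Ask one broker for cluster metadata; any known broker can answer."""
    if bootstrap_broker_id not in CLUSTER["brokers"]:
        raise ConnectionError(f"unknown broker {bootstrap_broker_id}")
    return CLUSTER


def leader_address(bootstrap_broker_id: int, topic: str, partition: int) -> str:
    """Bootstrap from one broker, then locate the leader of a partition."""
    meta = metadata_request(bootstrap_broker_id)
    leader_id = meta["leaders"][(topic, partition)]
    return meta["brokers"][leader_id]


# The answer is the same whichever broker is used for bootstrapping.
print(leader_address(101, "orders", 2))  # broker-103:9092
print(leader_address(103, "orders", 2))  # broker-103:9092
```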

Delivery semantics for consumers

- Consumers choose when to commit offsets.

- There are 3 delivery semantics:

  - At most once:

    - Offsets are committed as soon as the message is received.

    - If the processing goes wrong, the message will be lost (it won't be read again).

  - At least once (usually preferred):

    - Offsets are committed after the message is processed.

    - If the processing goes wrong, the message will be read again.

    - This can result in duplicate processing of messages. Make sure your processing is idempotent (i.e. processing the same message again won't affect your systems).

  - Exactly once:

    - Can be achieved for Kafka => Kafka workflows using the Kafka Streams API.

    - For Kafka => External System workflows, use an idempotent consumer.

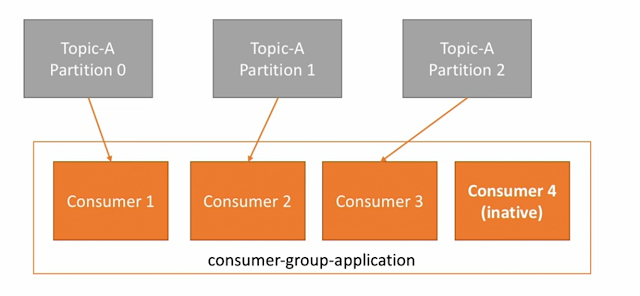
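
The difference between at-most-once and at-least-once comes down to whether the offset is committed before or after processing. The simulation below is a minimal sketch with an in-memory list standing in for a partition and a single simulated crash between the two steps. The function names, the `crash_at` parameter, and the dict used as an idempotent sink are all assumptions made for illustration, not Kafka APIs.

```python
def consume(log, start, crash_at, commit_first):
    """Read `log` from `start`, crashing between commit and process at `crash_at`.

    Returns the (offset, message) pairs that were processed and the
    committed offset, i.e. the next offset to read after a restart.
    """
    processed = []
    committed = start
    for offset in range(start, len(log)):
        if commit_first:
            committed = offset + 1                   # commit on receipt
            if offset == crash_at:
                break                                # crash before processing
            processed.append((offset, log[offset]))
        else:
            processed.append((offset, log[offset]))  # process first
            if offset == crash_at:
                break                                # crash before committing
            committed = offset + 1
    return processed, committed


def run_with_one_crash(log, commit_first):
    """Consume, crash once at offset 2, then restart from the committed offset."""
    first, committed = consume(log, 0, crash_at=2, commit_first=commit_first)
    second, _ = consume(log, committed, crash_at=None, commit_first=commit_first)
    return first + second


log = ["a", "b", "c", "d"]
at_most_once = run_with_one_crash(log, commit_first=True)
at_least_once = run_with_one_crash(log, commit_first=False)

print([m for _, m in at_most_once])   # ['a', 'b', 'd']
print([m for _, m in at_least_once])  # ['a', 'b', 'c', 'c', 'd']

# Idempotent sink: writing the same offset twice leaves a single entry.
sink = {}
for offset, message in at_least_once:
    sink[offset] = message
print(sorted(sink.items()))  # [(0, 'a'), (1, 'b'), (2, 'c'), (3, 'd')]
```

With commit-first, message `c` is lost; with process-first, `c` is delivered twice, and keying the write by offset is one way to make the duplicate harmless.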

Consumer Offsets

- Kafka stores the offsets at which a consumer group has been reading

- The offsets committed live in a Kafka topic named __consumer_offsets

- When a consumer in a group has processed data received from Kafka, it should commit the offsets

- If a consumer dies, it will be able to read back from where it left off thanks to the committed consumer offsets!
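
As a rough picture of how committed offsets let a group resume, the sketch below uses a plain dict as a stand-in for `__consumer_offsets`, keyed by (group, topic, partition). The `poll` and `commit` helpers, the group names, and the choice to start an unknown group at offset 0 are assumptions for this example only; in a real consumer the starting point for a new group depends on its configuration.

```python
# Stand-in for __consumer_offsets: (group, topic, partition) -> next offset to read.
committed_offsets = {}


def poll(log, group, topic, partition, max_records):
    """Return the start offset and up to max_records from the committed position."""
    start = committed_offsets.get((group, topic, partition), 0)
    return start, log[start:start + max_records]


def commit(group, topic, partition, next_offset):
    """Record the next offset this group should read for the partition."""
    committed_offsets[(group, topic, partition)] = next_offset


log = ["m0", "m1", "m2", "m3", "m4"]

# A consumer in group "billing" processes two records, commits, then dies.
start, batch = poll(log, "billing", "orders", 0, max_records=2)
commit("billing", "orders", 0, start + len(batch))

# A replacement consumer in the same group resumes from the committed offset.
start, batch = poll(log, "billing", "orders", 0, max_records=2)
print(start, batch)  # 2 ['m2', 'm3']

# A different group tracks its own offsets independently.
start, batch = poll(log, "audit", "orders", 0, max_records=2)
print(start, batch)  # 0 ['m0', 'm1']
```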