1. Augment the file handle limits

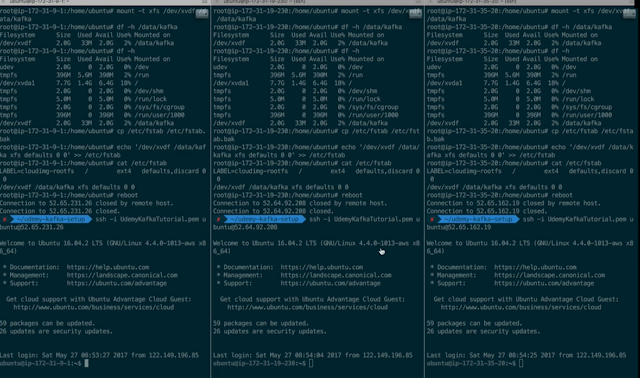

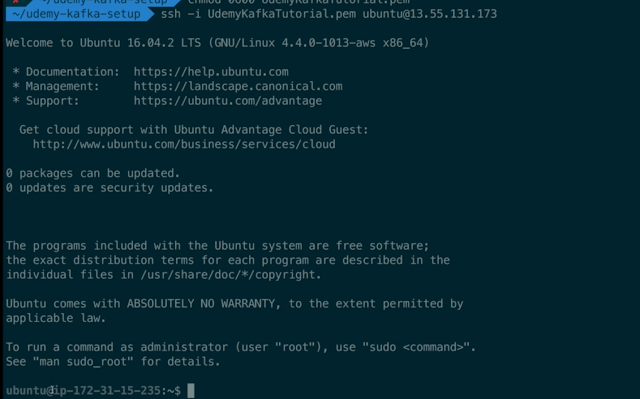

2. Launch Kafka on one machine

3. Setup Kafka as a Service

#!/bin/bash

# Add file limits configs - allow to open 100,000 file descriptors

echo "* hard nofile 100000

* soft nofile 100000" | sudo tee --append /etc/security/limits.conf

# reboot for the file limit to be taken into account

sudo reboot

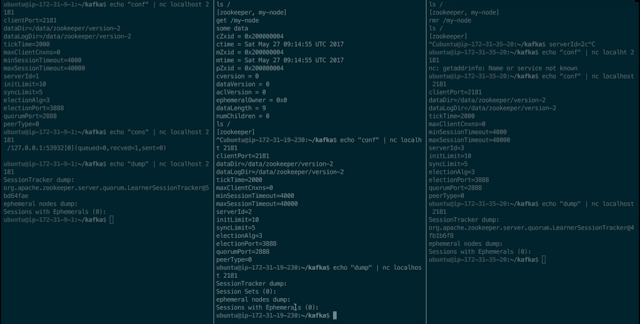

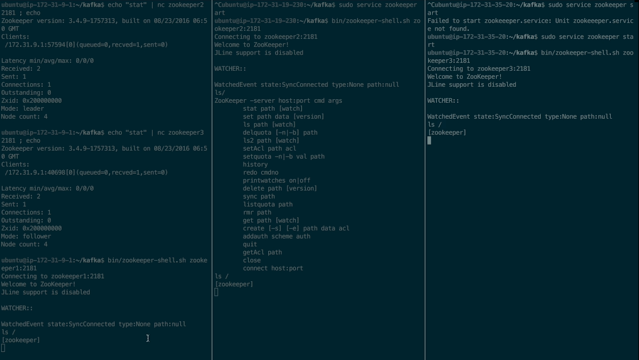

sudo service zookeeper start

sudo chown -R ubuntu:ubuntu /data/kafka

# edit kafka configuration

rm config/server.properties

nano config/server.properties

# launch kafka

bin/kafka-server-start.sh config/server.properties

# Install Kafka boot scripts

sudo nano /etc/init.d/kafka

sudo chmod +x /etc/init.d/kafka

sudo chown root:root /etc/init.d/kafka

# you can safely ignore the warning

sudo update-rc.d kafka defaults

# start kafka

sudo service kafka start

# verify it's working

nc -vz localhost 9092

# look at the server logs

cat /home/ubuntu/kafka/logs/server.log

# create a topic

bin/kafka-topics.sh --zookeeper zookeeper1:2181/kafka --create --topic first_topic --replication-factor 1 --partitions 3

# produce data to the topic

bin/kafka-console-producer.sh --broker-list kafka1:9092 --topic first_topic

hi

hello

(exit)

# read that data

bin/kafka-console-consumer.sh --bootstrap-server kafka1:9092 --topic first_topic --from-beginning

# list kafka topics

bin/kafka-topics.sh --zookeeper zookeeper1:2181/kafka --list

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=1

# change your.host.name by your machine's IP or hostname

advertised.listeners=PLAINTEXT://kafka1:9092

# Switch to enable topic deletion or not, default value is false

delete.topic.enable=true

############################# Log Basics #############################

# A comma seperated list of directories under which to store log files

log.dirs=/data/kafka

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=8

# we will have 3 brokers so the default replication factor should be 2 or 3

default.replication.factor=3

# number of ISR to have in order to minimize data loss

min.insync.replicas=1

############################# Log Retention Policy #############################

# The minimum age of a log file to be eligible for deletion due to age

# this will delete data after a week

log.retention.hours=168

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181/kafka

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

############################## Other ##################################

# I recommend you set this to false in production.

# We'll keep it as true for the course

auto.create.topics.enable=true

#!/bin/bash

#/etc/init.d/kafka

DAEMON_PATH=/home/ubuntu/kafka/bin

DAEMON_NAME=kafka

# Check that networking is up.

#[ ${NETWORKING} = "no" ] && exit 0

PATH=$PATH:$DAEMON_PATH

# See how we were called.

case "$1" in

start)

# Start daemon.

pid=`ps ax | grep -i 'kafka.Kafka' | grep -v grep | awk '{print $1}'`

if [ -n "$pid" ]

then

echo "Kafka is already running"

else

echo "Starting $DAEMON_NAME"

$DAEMON_PATH/kafka-server-start.sh -daemon /home/ubuntu/kafka/config/server.properties

fi

;;

stop)

echo "Shutting down $DAEMON_NAME"

$DAEMON_PATH/kafka-server-stop.sh

;;

restart)

$0 stop

sleep 2

$0 start

;;

status)

pid=`ps ax | grep -i 'kafka.Kafka' | grep -v grep | awk '{print $1}'`

if [ -n "$pid" ]

then

echo "Kafka is Running as PID: $pid"

else

echo "Kafka is not Running"

fi

;;

*)

echo "Usage: $0 {start|stop|restart|status}"

exit 1

esac

exit 0